EXIST 2024 Challenge

Overview

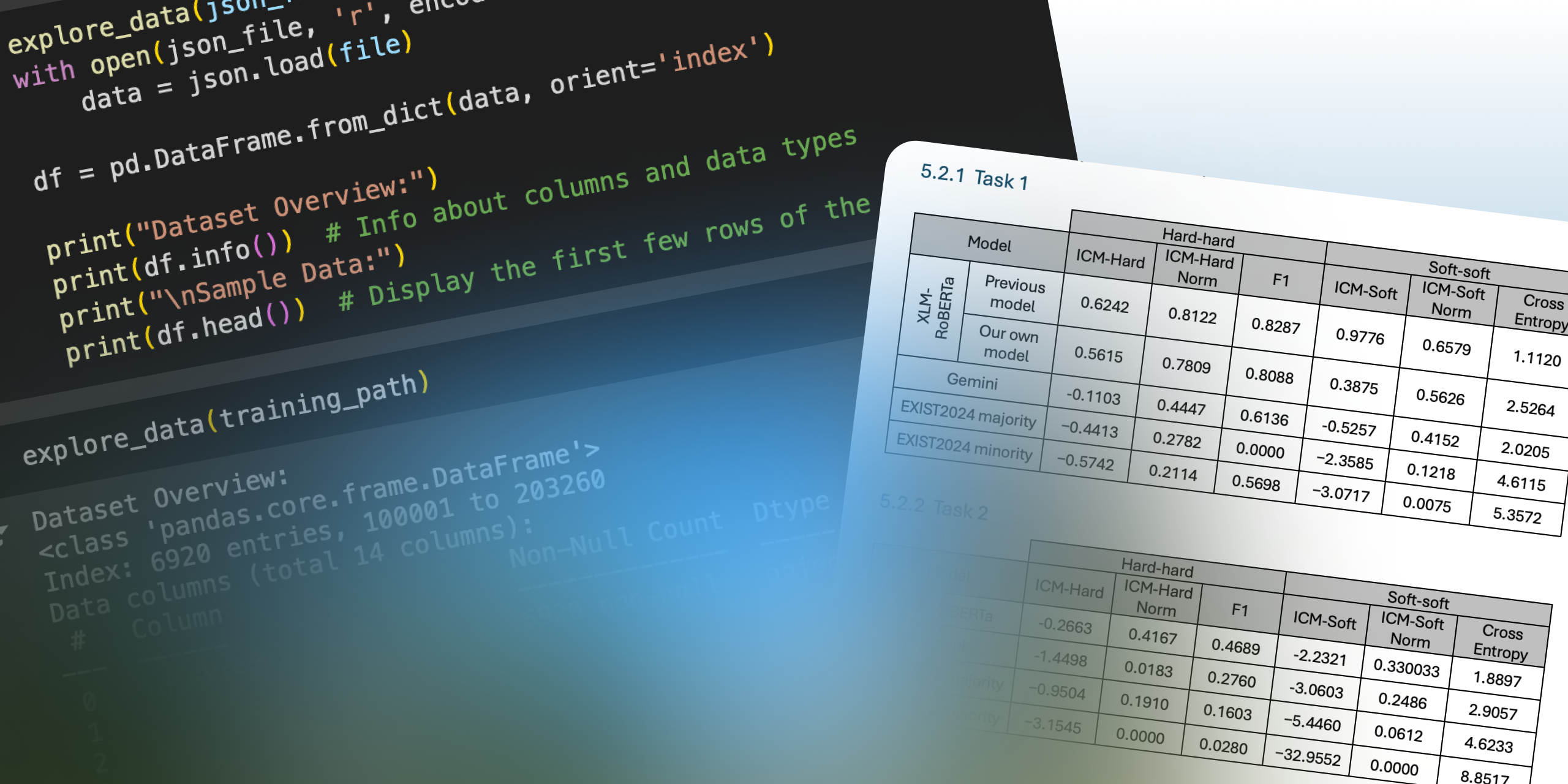

The EXIST challenge seeks to advance research on sexism detection through two core challenges: sexism identification and intention classification. The first challenge involves determining whether a given text contains sexist content, while the second deals with the motivations behind such expressions.

Learn more about EXISTTo address these tasks, we leverage state-of-the-art natural language processing techniques, including fine-tuning the XLM-RoBERTa model and applying zero-shot learning with Gemini.

Our approach

Modifications have been made to the implementation of solutions proposed by some of the competition participants. In particular, two distinct classification models are proposed.

The first employs a fine-tuned version of RoBERTa, while the second utilises Google's Gemini for zero-shot classification. Both solutions demonstrate convincing performance in both single-label and multi-label classification.

The code for our project is freely available at:

https://github.com/lorenzocal/exist2024Fine-tuning an XLM-RoBERTa model

The Hugging Face Transformers framework provides APIs and tools to download and train state-of-the-art pretrained models.

We used the XLMRobertaForSequenceClassification class which is an XLM-RoBERTa transformer with a sequence classification/regression head on top (a linear layer on top of the pooled output).

Preprocessing input datasets

The Hugging Face API for training the transformer model requires data to be in a certain format. This means that in order to pass it to the model for fine-tuning, the data must be pre-processed.

Two major steps had to be done:

- 1. Tokenizing the text using the pretrained XLM-Roberta model

- 2. Mapping each example from JSON to a dictionary: {“input_ids”, “label”} Where input_ids is a list of XLM-RoBERTa tokenizer’s representation for each word in a tweet, and label is the label assigned to each example: 1 for “YES”, 0 for “NO”.